- GTIG finds threat actors are cloning mature AI models using distillation attacks

- Sophisticated malware can use AI to manipulate code in real time to evade detection

- State-sponsored groups are creating highly convincing phishing kits and social engineering campaigns

If you’ve used any modern AI tools, you’ll know they can be a great help in reducing the tedium of mundane and burdensome tasks.

Well, it turns out threat actors feel the same way: the latest Google Threat Intelligence Group (GTIG) AI Threat Tracker report has found that attackers are using AI more than ever.

From working out how AI models reason in order to clone them, to integrating AI into attack chains to bypass traditional network-based detection, GTIG has outlined some of the most pressing threats. Here is what it found.

How threat actors use AI in attacks

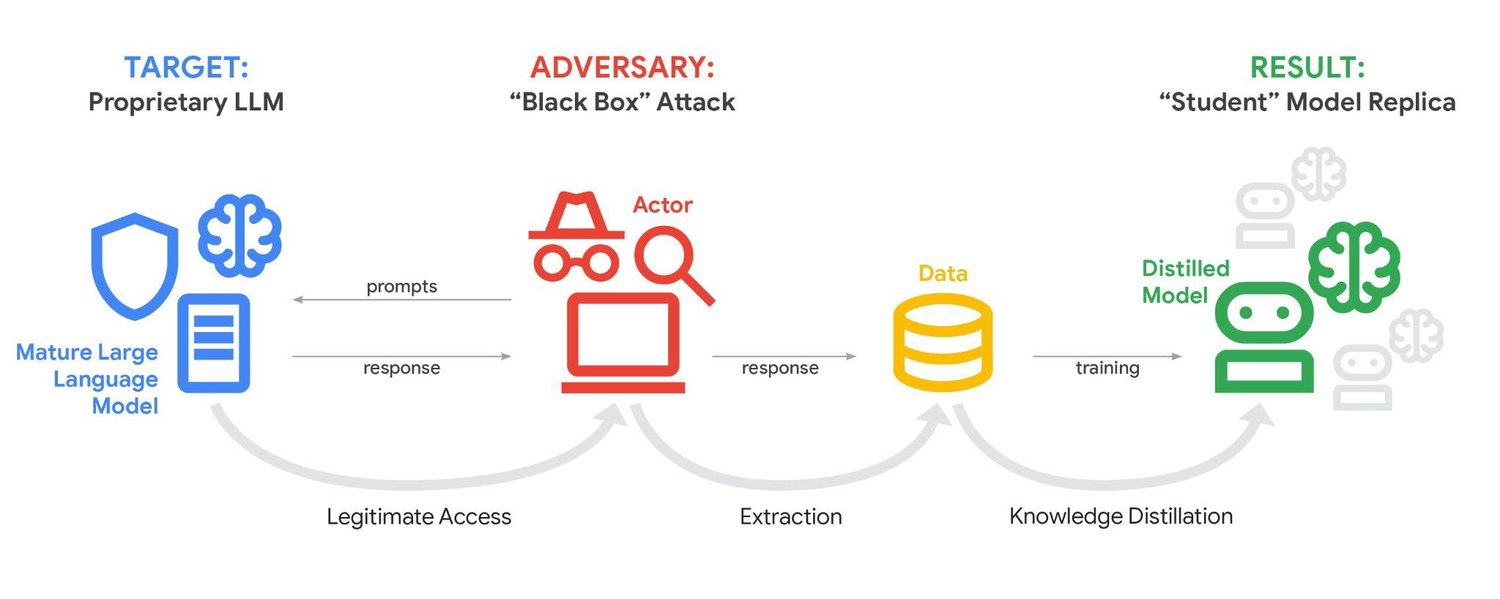

For starters, GTIG found that threat actors are increasingly using ‘distillation attacks’ to quickly clone large language models (LLMs) for their own purposes. Attackers send a huge number of prompts to find out how the LLM reasons through queries, and then use the responses to train their own model.

Attackers can then use their own model to avoid paying for the legitimate service, study the distilled model to learn how the original LLM is built, or search it for weaknesses that may also be exploitable in the legitimate service.

AI is also being used to support intelligence gathering and social engineering campaigns. Both Iranian and North Korean state-sponsored groups have used AI tools in this way, with the former using AI to collect information on business relationships in order to create a pretext for contact, and the latter using AI to amalgamate intelligence to help plan attacks.

GTIG has also observed a rise in the use of AI to create highly convincing phishing kits for mass distribution in order to harvest credentials.

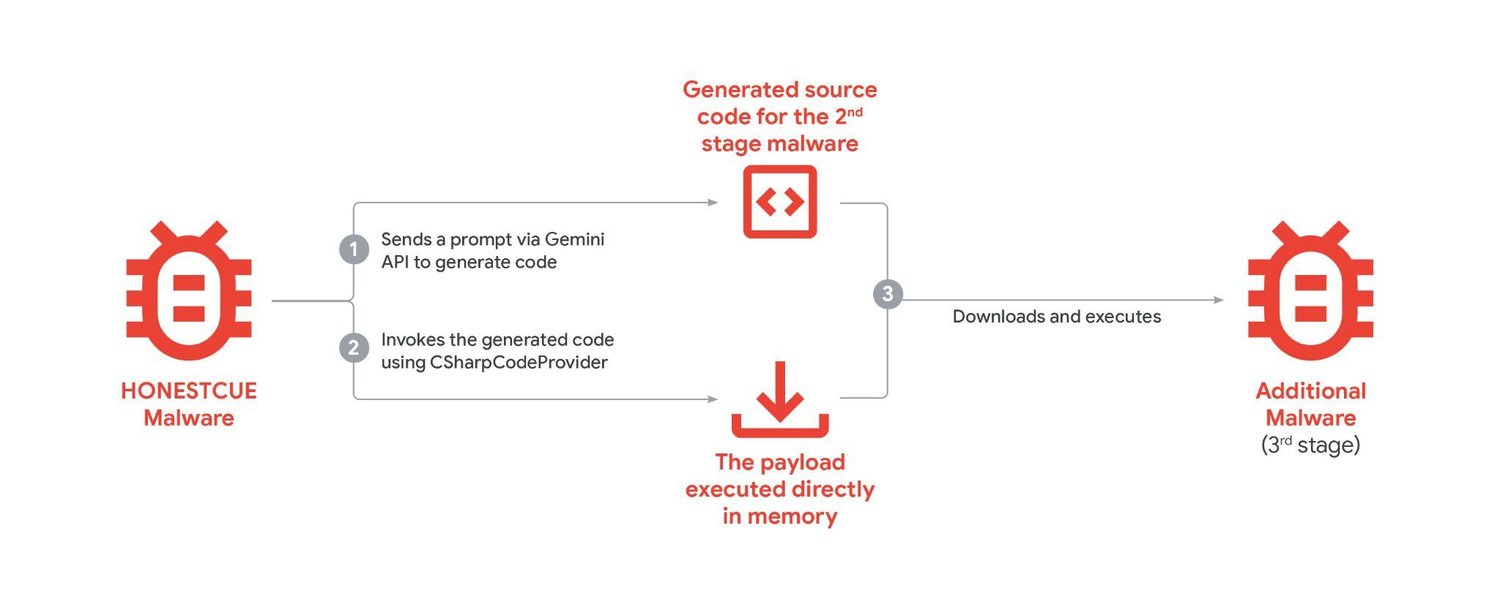

Moreover, some threat actors are integrating AI models into malware so that it can adapt to evade detection. One example, tracked as HONESTCUE, dodged network-based detection and static analysis by using Gemini to rewrite and execute code during an attack.

But not all threat actors are alike. GTIG also noted serious demand for custom AI tools built for attackers, with specific calls for tools capable of writing malware code. For now, attackers rely on distillation attacks to create custom models for offensive use.

If such tools were to become widely available and easy to distribute, threat actors would likely adopt malicious AI quickly across attack vectors to improve the effectiveness of malware, phishing, and social engineering campaigns.

To defend against AI-augmented malware, many security solutions are deploying their own AI tools to fight back. Rather than relying on static analysis, AI can be used to analyze potential threats in real time and recognize the behavior of AI-augmented malware.

AI is also being employed to scan emails and messages to spot phishing in real time, at a scale that would otherwise require thousands of hours of human work.

Google is also actively seeking out potentially malicious use of Gemini, and has deployed one tool to help hunt down software vulnerabilities (Big Sleep) and another to help patch them (CodeMender).
