Unless you take curation of your social feeds very seriously, the tidal wave of AI slop images and videos has felt nigh inescapable. More worrying still is how often this crashing tide serves to highlight which of our relatives struggle to discern AI-generated content from all the slop made by human hands. Even worse, one can often find oneself fooled by an image spat out by a generative model.

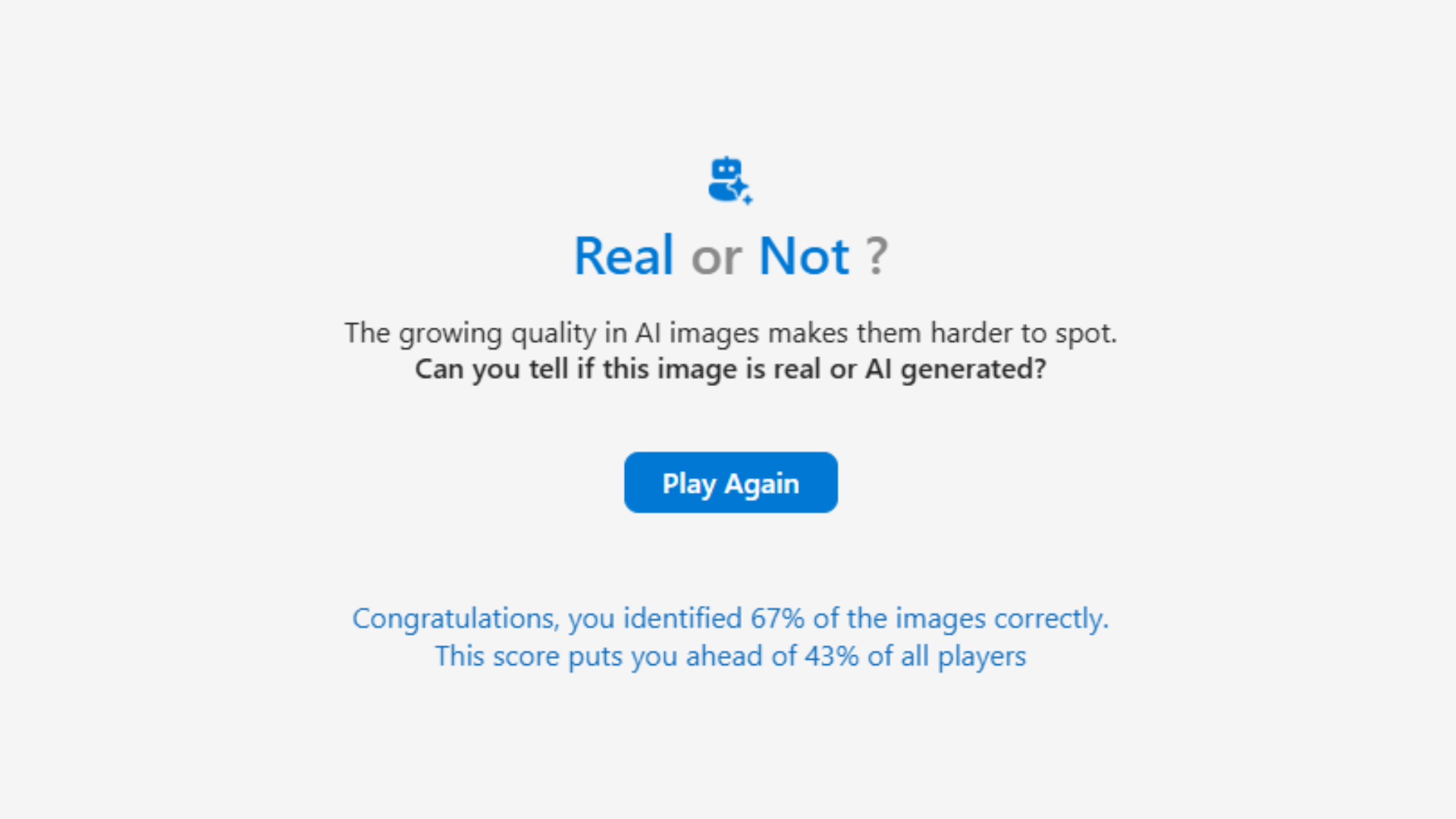

Sound familiar? Turns out it isn’t just you. According to a new study out of Microsoft’s AI for Good research lab, people can accurately discern between real and AI-generated images only 62% of the time. I suppose that’s better than simply flipping a coin, but it’s still pretty bleak.

This figure is based on “287,000 image evaluations” from 12,500 participants tasked with labelling a selection of images. Participants assessed roughly 22 pictures each, chosen from a sizeable bank that included both a “collection of 350 copyright free ‘real’ images” plus “700 diffusion-based images using DallE-3, Stable diffusion-3, Stable diffusion XL, Stable diffusion XL inpaintings, Amazon Titan v1 and Midjourney v6.” If you fancy a humbling experience, you can give the same ‘real or not real’ quiz a go yourself.

The Microsoft research lab then exposed their own in-development AI detector to the same bank of images, sharing that this tool was able to accurately identify artificially generated images at least 95% of the time.

I understand that Microsoft is a huge, many-limbed company with interests all over the place, but it does feel weird for their research lab to highlight a problem that other parts of the company have arguably fuelled, not to mention for some part of Microsoft to be acting like, ‘but don’t worry, we’ll soon have a tool for that.’

To their credit, the research team does also call for “more transparency when it comes to generative AI,” arguing that additional measures like “content credentials and watermarks are necessary to inform the public about the nature of the media they consume.”

However, it’s easy enough to crop around a watermark, and I’ve no doubt those sufficiently motivated to do so will find a way around the similarly proposed measures of digital signatures and content credentials.

A multi-pronged approach to AI detection definitely doesn’t hurt, especially as it’s clear we need to graduate beyond simply looking at a suspicious post and going ‘it’s giving AI vibes.’ But while we’re developing such an AI-detection toolkit, maybe we can also address AI’s bottomless appetite for power, as well as its resulting greenhouse gas emissions and impact on local communities. Just a thought!
